- The aim of the project is to analyze correlations between the threat status of species tracked on the IUCN Red List and the threats and stresses they face.

- This repository is dedicated to scraping the data fields from the IUCN Red List that are needed to establish such correlations.

- This project is a collaboration with Uttara Mendiratta and Anand M Osuri from the Nature Conservation Foundation, India.

- The `birds.csv` and `mammals.csv` files contain the species for which data has to be scraped.

- The permissions of `start.sh` have to be changed before the first run of the code: `user@computer:~/Red$ chmod +x start.sh`

- The pipeline is triggered using the `start.sh` script, which in turn runs `scraper.py`: `user@computer:~/Red$ ./start.sh`

- The scraped data is written to disk as an `X_WORKING.csv` file, a copy of the original `X.csv`, so that the original files are never modified.
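The working-copy convention can be sketched in Python with `pandas`. This is a minimal sketch under stated assumptions, not the repository's `interface.py`: the helper names `working_copy_path`, `load_working_copy` and `save_working_copy` are hypothetical, and the working copy is assumed to sit next to the original file with `_WORKING` appended to its name.

```python
import shutil
from pathlib import Path

import pandas as pd


def working_copy_path(original: Path) -> Path:
    """Hypothetical naming rule: birds.csv -> birds_WORKING.csv in the same directory."""
    return original.with_name(f"{original.stem}_WORKING{original.suffix}")


def load_working_copy(original: Path) -> pd.DataFrame:
    """Create the working copy once, then always read from the copy."""
    working = working_copy_path(original)
    if not working.exists():
        # The original file is only ever read here, never opened for writing.
        shutil.copyfile(original, working)
    return pd.read_csv(working)


def save_working_copy(df: pd.DataFrame, original: Path) -> None:
    """Persist the dataframe to the working copy (e.g. at the end of a run)."""
    df.to_csv(working_copy_path(original), index=False)
```

In this sketch an interrupted run leaves `birds.csv` untouched; the next call to `load_working_copy` simply picks up `birds_WORKING.csv` as it was last saved.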

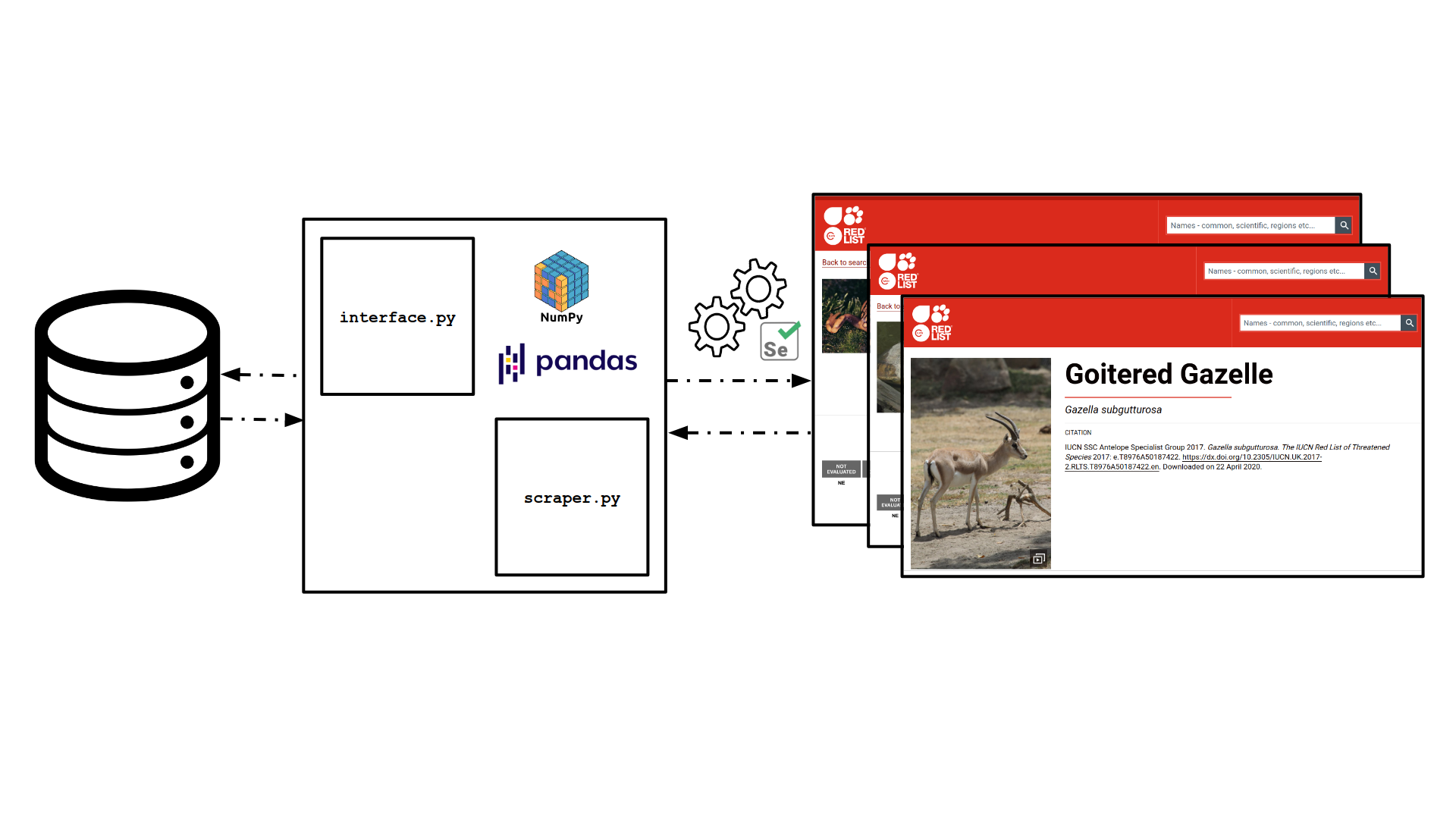

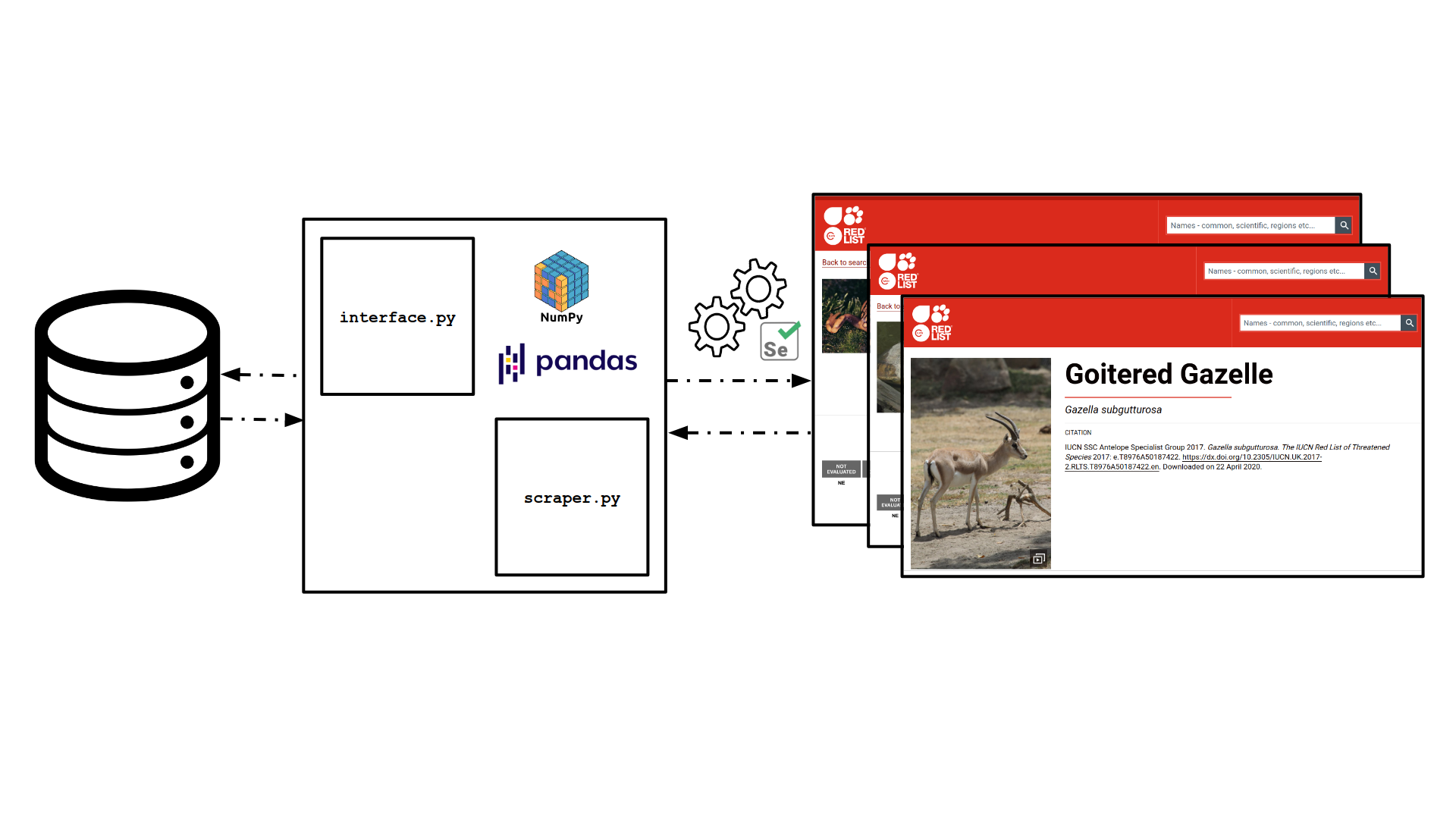

- The model is made of two components: (1) `interface.py` and (2) `scraper.py`.

Figure 2.1 Model to scrape data from IUCN Red List


- Disk read/write operations are handled by `interface.py`.

- The `pandas` dataframe is saved to disk by `interface.py` after each run.

- `scraper.py` interacts with the web page using the Selenium browser-automation framework.

- The HTML tags contained in the `page_source` gathered by the Selenium middleware are made searchable using BeautifulSoup.

- The `scraper.py` pipeline collects the prescribed HTML tags from the queried web page and updates a `pandas` dataframe with the information.

- The `speciesCounter()` function in `scraper.py` returns the `sno` of the last species that is missing the `stable`, `unknown` or `decline` population trend tag, which every scraped species must have.

- While writing elements to the `pandas` dataframe, an element may be shifted one or more columns to the right. This error can trigger a `pandas` memory warning, since entities of multiple datatypes then occupy the same column.

- Some species are not indexed by the IUCN Red List. This may cause the `start.sh` script to keep looping while trying to collect the species URL from the search page.
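The scraping steps (parsing Selenium's `page_source` with BeautifulSoup, writing the results into the dataframe, and checking the population trend tags) can be sketched as follows. This is a hedged illustration rather than the code in `scraper.py`: the CSS selectors, the `population_trend` and `threats` column names, and every function name are hypothetical placeholders, and the page is passed in as an HTML string so that Selenium is not needed to run it.

```python
import pandas as pd
from bs4 import BeautifulSoup

# Population trend tags every scraped species is expected to carry.
VALID_TRENDS = {"stable", "unknown", "decline"}


def parse_species_page(page_source: str) -> dict:
    """Hypothetical parser; the selectors below are placeholders, not IUCN markup."""
    soup = BeautifulSoup(page_source, "html.parser")
    trend = soup.find("p", class_="population-trend")  # hypothetical selector
    threats = [li.get_text(strip=True) for li in soup.select("ul.threats li")]  # hypothetical
    return {
        "population_trend": trend.get_text(strip=True).lower() if trend else None,
        "threats": "; ".join(threats),
    }


def update_row(df: pd.DataFrame, sno: int, fields: dict) -> None:
    """Write each scraped field into the named column of the row for `sno`.

    Assigning by column label rather than by position keeps a value from
    landing in a neighbouring column.
    """
    for column, value in fields.items():
        if column not in df.columns:
            df[column] = None  # create an object column so strings fit without upcasting
        df.loc[df["sno"] == sno, column] = value


def missing_trend_snos(df: pd.DataFrame) -> list:
    """Return the sno of every row whose trend is not one of VALID_TRENDS."""
    mask = ~df["population_trend"].isin(VALID_TRENDS)
    return df.loc[mask, "sno"].tolist()


def mixed_type_columns(df: pd.DataFrame) -> list:
    """Return the columns whose non-null cells hold more than one Python type."""
    return [col for col in df.columns if df[col].dropna().map(type).nunique() > 1]
```

`missing_trend_snos` returns every offending `sno`, whereas `speciesCounter()`, as described above, reduces this to a single one. `mixed_type_columns` is one way to locate columns where a right-shifted entry has already mixed datatypes.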

If you decide to use our client, scraper or cleaner for your project, or as a means to interface with the IUCN database, please cite our 2021 Conservation Letters paper!

    @article{mendiratta2021mammal,
      title={Mammal and bird species ranges overlap with armed conflicts and associated conservation threats},
      author={Mendiratta, Uttara and Osuri, Anand M and Shetty, Sarthak J and Harihar, Abishek},
      journal={Conservation Letters},
      volume={14},
      number={5},
      pages={e12815},
      year={2021},
      publisher={Wiley Online Library}
    }

https://github.com/SarthakJShetty/Red

